It’s nearing the end of the school day. Several students watch the clock anxiously, waiting for the bell to ring. As the blaring tone echoes across the school, the teacher reminds everyone over the rising cacophony of students hurrying to leave that they all have several online modules to complete before midnight tonight and a practice online test due next week. For most of the students, going online and spending the necessary thirty minutes to an hour on the modules should be no problem (aside from slight annoyance over having homework that day). However, for some of the kids in the class, getting on the internet and finishing homework from six or seven classes every night is a near-impossible task. I talked in depth with one of these students about his internet access dilemma (for anonymity, I am calling him Matthew). Matthew’s parents live paycheck to paycheck and cannot afford either a computer or a monthly internet plan. Several options remain for Matthew: waiting for a thirty-minute computer session at a library several towns over with more than forty people in front of him, standing outside a restaurant with a free Wi-Fi hotspot and stalling at every connection drop, or borrowing a computer from a compassionate classmate. In school, he constantly turns in his assignments late and lags behind his peers in learning and research because he lacks internet connectivity. Though Matthew’s life goal is to become a biologist, this goal has grown increasingly difficult to reach as time passes. When it is time for college applications, Matthew may not have good enough grades to get into a good university in the major he wants. Students like Matthew are put at a disadvantage, lagging behind in grades and career opportunities simply because they lack easy access to technology.

The Digital Revolution

Over the past few decades, computers and internet access have become ubiquitous across most of Western civilization. At the same time, the shift from paper to digital for everyday tasks over the last few years has transformed internet usage “[from] a luxury [to] a necessity” in the words of former President Obama (Knibbs). Yet despite the exponential growth of technology in the digital era, there exists an economic, educational, and social schism between those who have easy and unrestricted access to the internet and those who do not. Dubbed the Digital Divide, this issue has remained largely unaddressed by both the general public and the government. With the futures of many at-risk students at stake, it is critical for society to gain a deeper understanding of the impact the Digital Divide has on education and to formulate ways to combat digital inequity.

Technology Integration In Education - 21st Century Learning

The increasing integration of technology into education creates several challenges for at-risk students. Today, “seven in 10 teachers now [assign] homework that requires web access” (Kang). Incorporating technology heavily into the curriculum is becoming an educational standard meant to help prepare students for the real world. In fact, several schools have elected to “write our own digital textbooks” to be accessed by remotely connecting to the school’s network through the internet (Bendici). With the majority of homework being assigned through the cloud, some teachers expect all their students to have internet access. Students who are unable to meet their deadlines because they lack technology not only lag behind in learning but also suffer poor grades (Kang). These obstacles have forced low-income students to find time-consuming workarounds just to finish their daily assignments. For many low-income students, waiting in long lines to use a library computer and sitting on a public bus for hours using a phone are the only choices they have, however impractical those choices are.

Not All Schools are Technologically-Engaged Equally

Some believe that the Digital Divide in education can be addressed through action from local school districts and the surrounding community. For some school districts, this can be a successful initiative, but for others it is a daunting task. Districts like the Mountain View-Los Altos District in Silicon Valley have pledged hundreds of thousands of dollars and partnered with major tech corporations such as Google to provide every student a Chromebook for use at home and during class as part of a new computer-based learning curriculum (“MVLA rolls out laptop integration” Newell). Another district, South Fayette near Pittsburgh, is actively working with nearby Carnegie Mellon University “to help develop its new computer science curriculum and train its teachers [and provide its students] access to some of the best minds in the region” (Herold). Though these programs are successful in promoting digital equity and preparing students for the future, they tend to be geographically concentrated and are available only to school districts that are more affluent or in close proximity to technological innovation.

In modern-day schooling, the Digital Divide poses two main problems for underfunded school districts across the US: “[a] lack of resources and problems in the community they serve” (Herold). For most of these schools, providing financial or ideological support to promote and develop tech-based learning (TBL) can be nearly impossible. Although the federal E-Rate Program helps to “[provide] broadband to libraries and schools,” it remains a constant struggle for impoverished schools to avoid running out of money (Vick; Herold). The CEO of Innovative Educator Consulting Network (which helps schools integrate technology into their curricula) points to the difficulty schools in low-income areas have trying to “prioritize and fix what’s most important” when everything is in a constant state of disrepair (Harm qtd. in Herold).

The Sto-Rox school district in Pittsburgh, ranked 102nd out of 103 districts in Allegheny County, Pennsylvania, now sends “20% of [the school’s] annual budget to charter schools,” to which many students in the Sto-Rox district have fled in search of better options (Herold). In the classroom, the district struggles to get even a small portion of its students online during class: it has 30-60 Chromebooks split among 1,300 students, devices which “sat unused for more than a year…[because] the district didn’t have consistent funding,” as well as faulty adapters for its dozens of interactive whiteboards that are too expensive to replace (Herold).

Though most school districts recognize the lasting effects of digital inequity, over 70 percent have not taken subsequent action, often because they lack a “clear vision...about what learning should look like and why,” as observed by Keith Krueger, the CEO of CoSN (a nonprofit composed of K-12 technology leaders) (Krueger qtd. in Bendici; Herold). This lack of clarity and focus on integrating TBL for at-risk students creates what has been dubbed an educational “vicious cycle” in which a lack of TBL engagement causes a lack of interest and vice versa. Commitment to technology in education is key: a “lack of engagement...when educators do not practice inclusive strategies in their teaching” and students feeling that technology “is not part of their self identity” create further hurdles that perpetuate the divide (Subramony qtd. in Rogers; Rogers; Subramony qtd. in Rogers).

Even among schools that integrate technology into their curriculum, teaching styles and levels of student engagement differ. For example, more affluent schools have connected classroom learning to real-life problem solving by blending technology into project-based learning (PBL). Under this nontraditional PBL approach, students are coached to learn and leverage technology tools, from online research and collaboration using Google Hangouts or Google Docs to shooting video and editing it in iMovie for TED talks, in order to solve problems and present solutions. In contrast, “students from low socioeconomic backgrounds use computers in school differently from more affluent students” (Journell). A recent study comparing schools in high and low socioeconomic areas of California found that “students in poorer schools use computers to make presentations of existing material while wealthier schools encourage students to research, edit papers, and perform statistical analyses” (Warschauer qtd. in Journell).

Technology integration and engagement issues are common among underfunded districts, yet alternatives are emerging. Several impoverished school districts are implementing these new options to address both digital inequity and annual funding issues. These schools have elected to forgo the costs of purchasing and maintaining hardcover textbooks for their tens of thousands of students by switching to a newly developed digital curriculum (Bendici). Because the digital content is aligned with state standards and updated annually, the switch allows schools to purchase devices at a one-to-one ratio, enabling all their students to access the internet at home.

Digital Equity = Equal Access to Computers + Free Broadband + Computer Literacy

To take effective action toward narrowing the Digital Divide, it is important to recognize that digital equity is not limited to equal access to computer technology and internet connectivity but also includes computer literacy. Despite the more limited options available in poverty-stricken areas, there are many small actions that help provide relief to students in need. By using resources such as CoSN’s Digital Equity Action Toolkit, school districts can analyze students’ limitations in accessing the web and ultimately implement “low-cost, simple efforts to assist low-income families” (Bendici). Such actions could include distributing maps marking the locations of free Wi-Fi areas to students, coordinating with local corporations to set up free hotspots, or even building municipal networks to reduce the overall cost of broadband coverage in an area. It should be noted, however, that in 20 states, cable companies have lobbied lawmakers to outright ban municipal networks (Vick).

Cathy Cox of the Academic Senate for California Community Colleges states, “there are many reasons students lack the necessary computer literacy skills. One simple fact is that many students may not have access to computers in their homes” (Cox). In an effort to address this, Sunday Friends, a nonprofit in Silicon Valley, is dedicated to helping low-income families bridge the Digital Divide by providing computer literacy classes and an opportunity to earn a computer. Several times a month, the nonprofit hosts STEM-related activities and computer education for students and families. Sunday Friends’ “Computer Education For Families” program stresses the value of computer literacy and advocates computer learning by both parents and children. Through this program, parents learn the necessary computer skills to help their children with homework, communicate with teachers via e-mail, and access school news online. The program also offers basic, intermediate, and advanced classes in computer and math skills, and awards students their own laptop upon completion of the nonprofit’s STEM curriculum. According to the nonprofit, it “[recognizes] that children who have positive experiences with STEM are more likely to apply themselves to learning STEM in school, which may lead to successful careers that build on STEM” (“LAHS Freshman seeks tech donations” Sunday Friends qtd. in Newell). Although Sunday Friends has assisted numerous families with computer access and computer literacy classes, the organization is unable to address the cost of internet access, which remains too expensive for many at-risk families, as many lack “steady jobs and are barely paying their rents” (Talati).

Broadband Expansion To Rural America

Compounding this issue, in rural areas that lack pre-existing infrastructure, “large internet service providers...struggle to make a return on their investment...given the lack of customer base” and the difficulty of installing broadband and fiber-optic cables, according to Gladys Palpallatoc of the California Emerging Technology Fund (Palpallatoc qtd. in Huval). Rob Blick, a computer programmer located in the Conotton Valley of Ohio, “can understand why cable companies don’t want to...wire his neck of the woods,” comparing broadband coverage to a “modern-day equivalent of the interstate highway system” (Blick qtd. in Vick). The lack of broadband access in less urban areas has led tech experts to adopt the mentality that internet access should be “like access to public roads. Today anyone who can walk, drive, or take a bus can [get to where they need to be] for free. For some it’s easier and for some it might be harder - but it’s available” (Talati). To address the issue of affordable internet connectivity, several Internet Service Providers (ISPs), including Cox Communications, have offered “high-speed internet access for $9.95 per month to [students]...on free or reduced-price lunch” after negotiation with local school districts (Bendici). In cities such as Mountain View, local tech corporations have given $800,000 to expand free wireless networks for public use (Noack). Although the Wi-Fi is slow and unreliable, this is a step in the right direction for digital equity. Through the promotion of cheaper internet options and free alternatives, at-risk students can be provided the tools necessary to keep up with the rest of their classmates as well as the world around them.

More recently, several tech startups have begun proposing ways to achieve internet coverage in low-income areas. One of these startups, SoftBank, plans to implement an industrial blimp that “will plug into the backbone of the internet, and then will be able to project a wireless network to customers at a range as big as 10,000 square kilometers,” providing a stable and fast internet connection to anyone in range (Rogers). On the other hand, the prospect of major tech corporations providing any sort of technological aid toward closing the Digital Divide has stagnated. Instead, companies like Google and Apple are electing to send “their philanthropy abroad” to countries like India because “they think it’s their new market,” where they can drastically increase the number of people connected to the internet and ultimately sell and advertise their services (Palpallatoc qtd. in Huval).

Can The Government Close The Digital Divide?

While communities and school districts search for ways to engage students in technology, some believe that legislation or a precedent set by the US government could provide great momentum towards digital equity. Over the last few years, however, digital inequity has become a partisan topic, resulting in a political stalemate. To better understand the standstill, it is important to understand the history of federal aid programs for technology and internet access. One of the first major broadband programs, the ConnectHome program, was launched by then-President Obama in 2015 to address the Digital Divide. This program partnered with Google to provide “free home internet access... in its twelve Google Fiber markets…[serving] 275,000 low-income homes in 27 cities” (Knibbs). At the time, though, Democrats and Republicans were divided on the right way to provide aid, with Democrats supporting federal grants and loans while Republicans were reluctant to authorize such large amounts of cash that would “prop up new companies to compete with existing internet providers” (Romm). Other programs such as the California Advanced Services Fund (CASF), Lifeline, E-Rate, and most recently the Internet for All Now Act have all found varying degrees of success. In the case of the CASF, the program made 300 million dollars of grant money available to broadband providers as an incentive to build fiber-optic cables in impoverished areas where providers would otherwise see low rates of return (Ulloa). In 2016, several lawmakers reintroduced the Internet for All Now Act to allocate more funds to the CASF, ultimately facing heavy criticism because it imposed “a burden on consumers and [was] poorly managed...with some money being used to build connections in remote - but not necessarily needy - areas” (Ulloa).

At the beginning of 2018, President Trump announced his plans to allocate 200 billion dollars in federal funds to upgrade infrastructure such as roads, bridges, and broadband networks. The proposal quickly sparked disagreement between Democrats and Republicans, with the former believing that the latter’s plans “[to] make it easier for [broadband providers] to...install small boxes that can beam speedy wireless service…[does] not solve any of our country’s most pressing broadband infrastructure problems” (Romm). Instead, Democrats believe that a large influx of federal funds is the surest way to achieve fast internet connections across the nation, while Republicans are unwilling to allocate the necessary funds, arguing that “mobile internet could act as a viable substitute for home broadband” (“Redefining 'Broadband'” O’Rielly qtd. in Finley). With 34% of those without easy access to the internet acknowledging a subsequent “[disadvantage] in developing new career skills or taking [school] classes,” it is vital for the right and left to agree on a resolution that will bring about major change for the tech divide (Lee).

Recent Federal Communications Commission Actions Could Widen the Digital Gap

Despite the dire importance of legislation and programs supporting digital equity, there exists a new threat to such aid in the form of the Federal Communications Commission’s new chairman, Ajit Pai. Since his appointment in 2017 by President Trump, Chairman Pai has “vowed to close the divide ‘between those who can use cutting-edge communications services and those who do not,’” yet has taken a rather roundabout path towards addressing such inequities (Vick). Pai has been highly skeptical of government programs such as Lifeline and E-Rate, choosing to oppose proposed expansions of both. The chairman and the Republican majority in the FCC have planned changes to the Lifeline program that cut down on the subsidies it offers, the number of people it is available to, and the number of carriers covered, on the grounds that there exists “widespread abuse” of the program (“The FCC's Latest Moves” Finley). Additionally, these changes “allow telecom companies to decommission aging DSL connections...without replacing them,” which sparked concern in rural areas due to a dearth of high-speed cable internet (“The FCC's Latest Moves” Finley). In the near future, it is expected that the Republican-led FCC will vote to lower the standard of broadband coverage, which according to Roberto Gallardo, a researcher at Purdue’s Center for Regional Development, “[could] reduce the motivation of broadband providers to expand service into rural communities, which already lag behind urban areas in both speed and availability of high speed internet” (“Redefining ‘Broadband’” Gallardo qtd. in Finley). As an alternative to such programs, the chairman maintains his belief that major broadband providers, who for the most part have set up little to no infrastructure in impoverished neighborhoods, will provide fast internet speeds to everyone (Vick). Pai has brushed off the aforementioned concerns as fear-mongering meant to belittle the capabilities of major broadband providers (Belvedere).

Deregulating Net Neutrality Worsens the Digital Divide

In addition to the government legislation and programs that can be enacted, the methods and rigor with which the Federal Communications Commission regulates ISPs play a vital role in the Digital Divide. Mr. Pai has long been a critic of Net Neutrality, asserting that the FCC’s reclassification of broadband as a public utility was an attempt to “replace internet ‘freedom with government control’” (Pai qtd. in Meyer). Adopted as FCC rules in 2015 under the Obama administration, Net Neutrality is the principle that broadband providers should treat all data as equal regardless of its origin. The University of Maryland reports that “83 percent of Americans do not approve of the FCC proposal [to repeal Net Neutrality]...including 3 out of 4 Republicans” (“This poll gave Americans” Fung). Nevertheless, Chairman Pai successfully repealed Net Neutrality in late 2017, allowing for a more “light touch regulation” (Pai qtd. in Low).

With Net Neutrality repealed, Pai is hopeful that in the future, “[the FCC’s] general regulatory approach will be a more sober one that is guided by evidence, sound economic analysis, and a good dose of humility” (Pai qtd. in Meyer). Directly contradicting Pai’s “[vow] to close the [Digital Divide],” the unbridled power that ISPs have over their consumers will only serve to increase digital inequity through pay barriers for reliable and usable internet access that low-income families cannot hope to afford (Vick). The issue with ISPs’ newfound ability to throttle data is that it can easily be exploited for profit: by creating slow and fast lanes for internet speed, ISPs can charge an exorbitant premium for fast and reliable service while throttling those in the slow lane to a grinding halt as an incentive to pay for a more expensive package. Vijay Talati, VP of Engineering at Juniper Networks and Board Secretary of Sunday Friends, views the recent repeal of Net Neutrality as “a step in the wrong direction… [diminishing] the hopes for free internet access” while furthering the Digital Divide between socioeconomic classes (Talati). For communities filled with at-risk students, even if ISPs wanted to build infrastructure in their area and offer coverage, the repeal of Net Neutrality ultimately gives broadband providers complete power over the price of internet coverage (LeMoult).

Digital Equity is not One Man’s Task

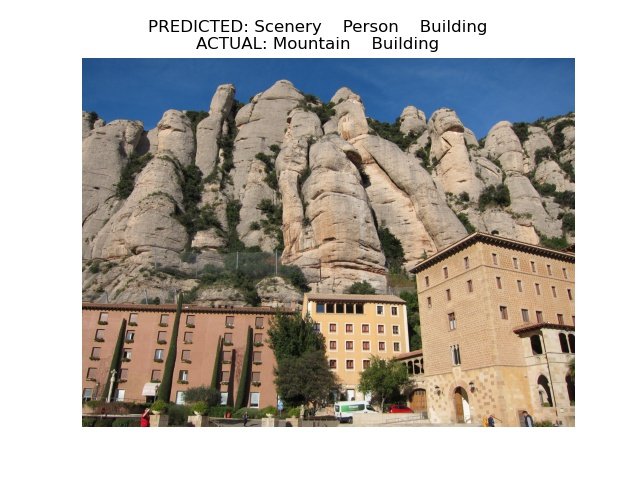

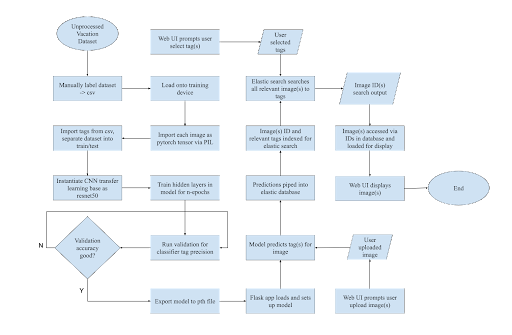

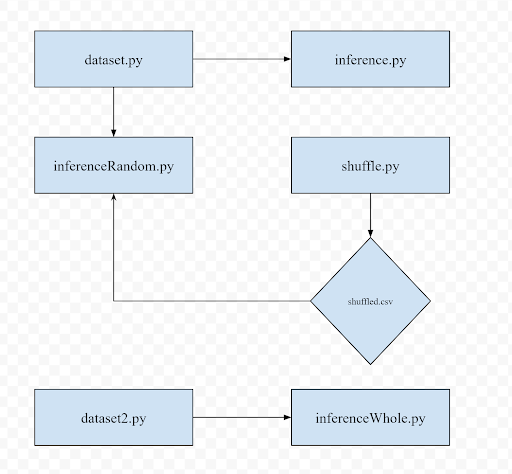

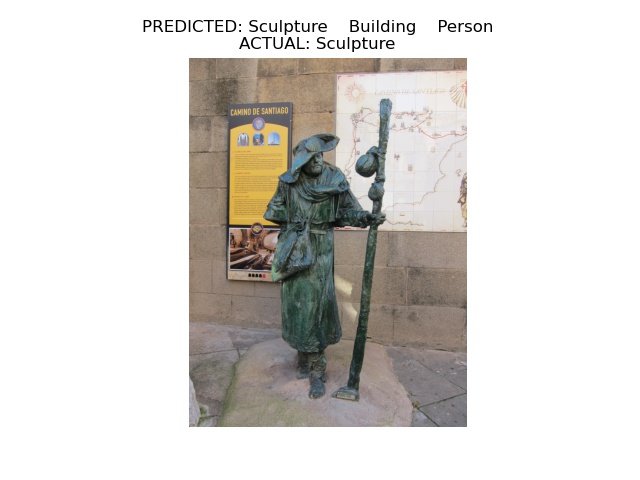

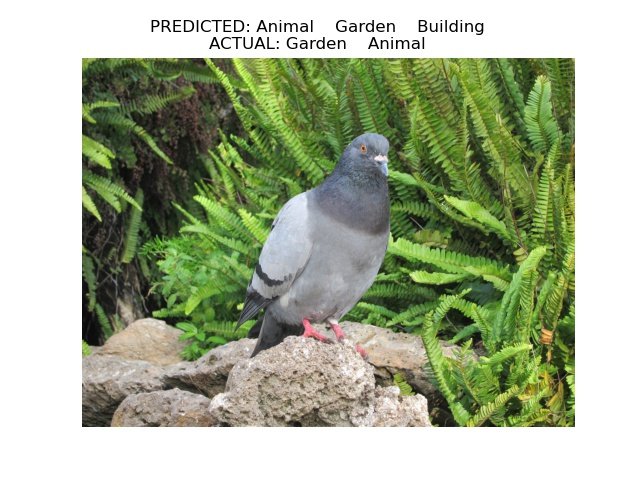

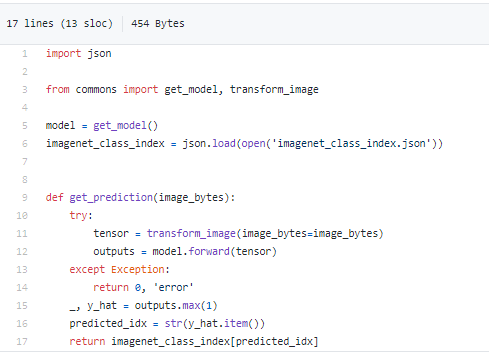

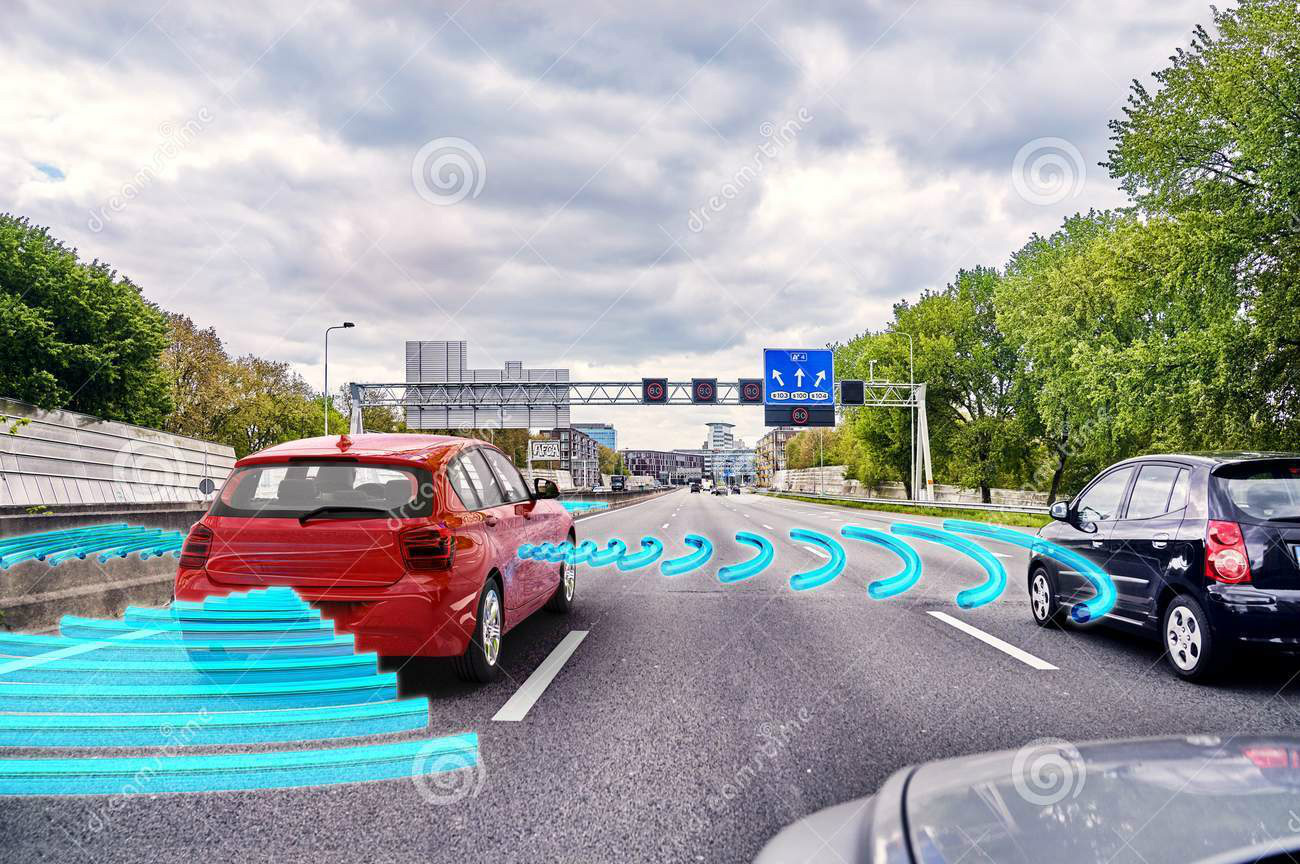

The battle against digital inequity in education is far from over. Recent advances in technology are a double-edged sword in that they both help and hurt the divide. While it is widely believed that the technology boom worsens the Digital Divide by leaving low-income and rural areas behind, tech innovation can also level the playing field in technology access and affordability. The quest to solve digital inequity has so far focused on technology access, but that is only half of the equation. The missing variable is technology engagement, interest, and adoption. The availability of collaborative open-source software such as Linux and e-learning platforms like Khan Academy can enable free, modern technology and education for all. Given the lack of interest and engagement in technology in certain regions and the diverse cognitive behaviors of students from low socioeconomic backgrounds, I also see an opportunity to develop an online teaching system that optimizes learning by teaching students in an engaging, interactive, and adaptive way so no child is left behind. Using deep learning, a branch of artificial intelligence, and techniques such as reinforcement learning, the system could use predictive data analysis to determine the best method of presenting complex ideas. While artificial intelligence is in its infancy, it has the potential to close the digital gap and enrich student engagement on a global scale. Ultimately, digital equity is not one man’s task alone; its success hinges on the teamwork and collaboration of school districts, communities, technologists, states, and the federal government. Together, each and every constituent can explore possible solutions, whether it is individuals lobbying Congress to change the law or tech philanthropists inventing the next breakthrough in personalized learning. It is the combined efforts of many that have the potential to bring forth digital equity, one student at a time.

Works Cited

Belvedere, Matthew J. “Trump's FCC Chair: We Didn't Break the Internet and We're Going to Make It Better.” CNBC, CNBC, 27 Feb. 2018.

Bendici, Ray. "BRIDGING the Digital Divide: OER, New Software and Business Partnerships Can Connect All Students with Educational Technology." District Administration, vol. 53, no. 10, Oct. 2017, p. 54. EBSCOhost.

Chin, Sharon. “Teenager's Project Refurbishes Laptops For Students In Need.” CBS San Francisco, 10 Aug. 2017.

Cox, Cathy. “The Digital Divide: Information Competency, Computer Literacy, and Community College Proficiencies.” The Digital Divide: Information Competency, Computer Literacy, and Community College Proficiencies | ASCCC, Academic Senate for California Community Colleges, Mar. 2009.

Finley, Klint. “Redefining 'Broadband' Could Slow Rollout in Rural Areas.” Wired, Conde Nast, 30 Aug. 2017.

Finley, Klint. “The FCC's Latest Moves Could Worsen the Digital Divide.” Wired, Conde Nast, 17 Nov. 2017.

Fung, Brian. “FCC Plan Would Give Internet Providers Power to Choose the Sites Customers See and Use.” The Washington Post, WP Company, 21 Nov. 2017.

Fung, Brian. “This Poll Gave Americans a Detailed Case for and against the FCC's Net Neutrality Plan. The Reaction among Republicans Was Striking.” The Washington Post, WP Company, 12 Dec. 2017.

Herold, Benjamin. "Poor Students Face Digital Divide in How Teachers Learn to Use Tech." Education Digest, vol. 83, no. 3, Nov. 2017, p. 16. EBSCOhost.

Huval, Rebecca. “The Digital Divide in Silicon Valley's Backyard.” The Daily Dot, 14 Aug. 2016.

Journell, Wayne. “The Inequities of the Digital Divide: Is e-Learning a Solution?” E-Learning, University of Illinois at Urbana-Champaign, vol. 4, no. 2, 2007, pp. 138–149.

Kang, Cecilia. “Bridging a Digital Divide That Leaves Schoolchildren Behind.” The New York Times, The New York Times, 22 Feb. 2016.

Kastrenakes, Jacob. “FCC Will Block States from Passing Their Own Net Neutrality Laws.” The Verge, The Verge, 22 Nov. 2017.

Kaye, Leon. “Renewables Can Narrow the Global Digital Divide.” Triple Pundit: People, Planet, Profit, 27 Feb. 2018.

Knibbs, Kate. “Obama Has a Plan to End America's Internet Access Inequality Problem.” Gizmodo, Gizmodo.com, 15 July 2015.

Lee, Seung. “California's Digital Divide Closing but New 'under-Connected' Class Emerges.” The Mercury News, The Mercury News, 27 June 2017.

LeMoult, Craig. “If Net Neutrality Is Repealed, What Will It Mean For People Who Don't Have Broadband Yet?” WGBH News, 11 Dec. 2017.

Loizos, Connie. “Steve Jurvetson on Why the Digital Divide Needs to Be Addressed Now.” TechCrunch, TechCrunch, 17 Aug. 2017.

Low, Cherlynn. “What You Need to Know about Net Neutrality (before It Gets Taken Away).” Engadget, 1 Dec. 2017.

Meyer, David. “How FCC Chair Ajit Pai Took His Fight Against Net Neutrality to the Finish Line.” Fortune, 14 Dec. 2017.

Newell, Traci. “LAHS Freshman Seeks Tech Donations.” Los Altos Town Crier, 12 Aug. 2015.

Newell, Traci. “MVLA Rolls out Laptop Integration This Fall.” Los Altos Town Crier, 23 July 2014.

Noack, Mark. “Google Gives $800,000 for Downtown WiFi.” Mountain View Online, 4 Jan. 2017.

Quaintance, Zack. “The Quest for Digital Equity.” Government Technology: State & Local Government News Articles, Mar. 2018.

Rogers, Kaleigh. “Startup Thinks Its Tethered, Internet-Beaming Blimps Can Bridge the Digital Divide.” Motherboard, 20 Feb. 2018.

Rogers, Sylvia. "Bridging the 21st Century Digital Divide." Techtrends: Linking Research & Practice to Improve Learning, vol. 60, no. 3, May 2016, pp. 197-199. EBSCOhost.

Romm, Tony. “Washington's next Big Tech Battle: Closing the Country's Digital Divide.” Recode, Recode, 17 Jan. 2018.

Talati, Vijay. “The Educational Digital Divide in a Nonprofit Context.” 11 Feb. 2018.

Ulloa, Jazmine. “California Wanted to Bridge the Digital Divide but Left Rural Areas behind. Now That's about to Change.” Los Angeles Times, Los Angeles Times, 18 Jan. 2018.

Vick, Karl. "Internet for All." Time, vol. 189, no. 13, 10 Apr. 2017, p. 34. EBSCOhost.